Ken Hayworth’s Personal Response to the MIT Technology Review Article

This is my rebuttal to the recent MIT Technology Review article by Michael Hendricks. The views expressed here are mine alone and should not be taken as an official statement from the BPF, which is an organization with a diverse range of opinions but a common goal: to advocate more scientific research into brain preservation…

Dear Michael,

As a neuroscientist who was heavily quoted in the NYT article I feel compelled to rebut some of your points. First off, please do not conflate what a small, highly-suspect company like Alcor is offering with what is possible in principle if the scientific and medical community were to start research in earnest. I started the Brain Preservation Prize as a challenge to Alcor and other such companies to ‘put up or shut up’, challenging them to show that their methods preserve the synaptic circuitry of the brain. After five years they have been unable to meet our prize requirements even when their methods were tested (by a third party) under ideal laboratory conditions. Out of respect for loved ones I will not comment on any particular case, but it is clear from online case reports that their actual results are often far worse than the laboratory prepared tissue we imaged. Speaking personally, I wish that all such companies would stop offering services until, at a minimum, they demonstrate in an animal model that their methods and procedures are effective at preserving ultrastructure across the entire brain. By offering unproven brain preservation methods for a fee they are effectively making it impossible for mainstream scientists to engage in civil discussion on the topic.

Unlike you however, I do think that cryonics and other brain preservation methods are worthy of serious scientific research today. Since it was invented in the 1960’s, the argument against cryonics has been that it (to quote the skeptic Michael Shermer) turns your brain to “mush”. This was certainly the case in the 1960’s but I wondered whether it was still the case fifty years later so I put forward my skeptical challenge not only to the cryonics community but to the scientific community as well, and I consider the results encouraging so far.

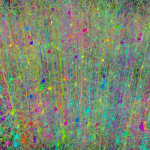

First off, the cryobiology research laboratory 21st Century Medicine has published papers (1, 2) showing that half millimeter thick rat and rabbit hippocampal slices can be loaded with cryoprotectant, vitrified solid at -130 degrees C, stored for months, rewarmed, washed free of cryoprotectant, and still show electrophysiological viability and long term synaptic potentiation. They have so far been unable to demonstrate such results for an intact rodent brain –unlike the in vitro slice preparation, perfusing the cryoprotectant through the brain’s vasculature results in osmotic dehydration of the tissue. However, this same research group now has a paper in press showing that such osmotic dehydration can be avoided if the brain’s vasculature is perfused with glutaraldehyde prior to cryoprotectant solution. Their paper reports high quality ultrastructure preservation across whole intact rabbit and pig brains even after being stored below -130 degrees C. I have personally acquired 10x10x10nm resolution FIB-SEM stacks from regions of these “Aldehyde Stabilized Cryopreserved” brains and have verified traceability of the neuronal processes and crispness of synaptic details. Considering these two results together, it seems at least plausible that further research might uncover a way to avoid osmotic dehydration without the need to resort to fixative perfusion, resulting in an intact brain as well preserved as the viable hippocampal slices.

Even if glutaraldehyde remains a necessity, this Aldehyde Stabilized Cryopreservation process appears capable of preserving the structural details of synaptic connectivity (the connectome) of an entire large mammalian brain in a state (vitrified solid at -130 degrees C) that could last unchanged for centuries. Shawn Mikula, another researcher competing for the brain preservation prize, has demonstrated a method for staining an entire intact mouse brain allowing it to be imaged at high resolution with 3D electron microscopy, and other researchers have begun to show how to perform such imaging in parallel, dividing the brain up into pieces and imaging the individual pieces with ultrafast 61 beam scanning electron microscopes.

To summarize, it looks like as of 2015 we may finally have a method (Aldehyde Stabilized Cryopreservation) that can demonstrably preserve the synaptic connectivity of a brain over centuries of storage. This should at least put the “mush” argument to rest. And we are beginning to see a plausible path for how such a brain’s connectome might be mapped in the future. So at the very least, the question of whether a connectome map is sufficient to “restore a person’s mind, memories, and personality by uploading it into a computer simulation” is no longer purely academic.

So let’s dive in…

You state: “Science tells us that a map of connections is not sufficient to simulate, let alone replicate, a nervous system…”

Really? Science tells you this is “impossible” because you have failed to do so in your worm studies so far?

You state: “I study a small roundworm, Caenorhabditis elegans, which is by far the best-described animal in all of biology. We know all of its genes and all of its cells (a little over 1,000). We know the identity and complete synaptic connectivity of its 302 neurons, and we have known it for 30 years. If we could “upload” or roughly simulate any brain, it should be that of C. elegans.”

This is incredibly misleading. The reason that the nervous system of C. elegans is difficult to ‘simulate’ today is that not enough is known about the functional properties of its neuronal types and synapses. The argument for future uploading assumes such knowledge will be completely known from decades of ‘side’ experiments. Are you saying that even if every detail of electrophysiology were known for the general C. elegans that one could still not interpret the structural connectome of a particular C. elegans to determine if it has or has not learned an olfactory avoidance task? Even if you suspect this, where is your evidence?

You state: “The presence or absence of a synapse, which is all that current connectomics methods tell us, suggests that a possible functional relationship between two neurons exists, but little or nothing about the nature of this relationship—precisely what you need to know to simulate it.”

Really? Little or nothing is known about the nature of the photoreceptor to bipolar cell synapse in the mammalian retina? Little or nothing is known about the bipolar to ganglion cell synapses? We may not know everything about these retinal cells and synapses but we know enough to have had “simulations” of retinas for two decades. Not based on the EM-level connectome directly but based on the statistics of connectivity as gleaned from coarser mappings. Do you really suspect that we would not be able to tell whether a particular retinal ganglion cell has an on-center or off-center receptive field based on the EM-level connectome alone? The textbooks and recent retinal connectomic studies argue otherwise.

Is little or nothing known about the synapses made by thalamic projections to the visual cortex? About the synapses made by spiny stellate cells and pyramidal cells in cortex? Textbooks say these are all glutamatergic and excitatory. Do you really suspect that it would be impossible, in principle, to determine the receptive field orientation of a cortical simple cell if you had a complete connectome? If you do you should tell the researchers at the Allen Institute since they are planning to do just that.

But maybe you say a simulation is more than just determining receptive fields. The success of deep learning convolutional neural networks argues against this. In a sense these neural networks are “simulations” of the visual cortex and they are very successful now at learning to recognize objects. These neural networks show directly how complex information can be encoded in a connectome, one receptive field at a time. If I took a Google deep learning network and read off its connection weights I could “copy” and “upload” it to another neural network simulation even if the individual connections strengths were determined only imprecisely. The textbooks say similar hierarchical networks of feature detecting cells underlie our own visual system.
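As a toy illustration of the weight-copying point above (it is not the Google network, and it makes no claim about real brains), the following sketch builds a tiny hand-set network that computes XOR, then "reads off" every weight with up to 10% relative error. All names here (`ORIGINAL`, `imprecise_copy`, `forward`) and all weight values are assumptions chosen for the example.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

# Hand-set weights for a tiny 2-2-1 network that computes XOR.
# Each unit is (weights for its inputs, bias). Values are illustrative.
ORIGINAL = {
    "hidden": [([20.0, 20.0], -10.0),    # behaves like OR
               ([-20.0, -20.0], 30.0)],  # behaves like NAND
    "output": [([20.0, 20.0], -30.0)],   # behaves like AND
}

def forward(net, inputs):
    """Run the inputs through the hidden layer, then the output layer."""
    activations = inputs
    for layer in ("hidden", "output"):
        activations = [
            sigmoid(sum(w * a for w, a in zip(weights, activations)) + bias)
            for weights, bias in net[layer]
        ]
    return activations[0]

def imprecise_copy(net, rel_error, rng):
    """Copy every weight and bias, each with up to +/- rel_error relative error."""
    def read(value):
        return value * (1.0 + rng.uniform(-rel_error, rel_error))
    return {
        layer: [([read(w) for w in weights], read(bias)) for weights, bias in units]
        for layer, units in net.items()
    }

rng = random.Random(0)  # fixed seed so the run is repeatable
COPY = imprecise_copy(ORIGINAL, rel_error=0.10, rng=rng)

# Compare the rounded decisions of the original and the imprecise copy.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, round(forward(ORIGINAL, [a, b])), round(forward(COPY, [a, b])))
```

In this toy case the copy makes the same decisions as the original because each unit operates far from its threshold, so small errors in individual weights do not flip any output. Whether biological circuits have comparable margins is exactly the kind of question the debate here turns on.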

By the way, you certainly get more than the “presence or absence of a synapse” from modern EM reconstructions. Check out this paper to see just how much information on synapse strength can be gleaned from 3D EM images alone.

I am certainly not saying that we now know everything about how the brain works, but I am saying that there is more than enough reason to suspect that the structural connectome may be sufficient to successfully simulate a brain given the depth of neuroscience knowledge we should possess by the year 2100 or 2200. Dismissing that as even a possibility hundreds of years in the future based on your failed attempts at understanding some particulars of C. elegans nervous system today seems very shortsighted. If you have real theoretical arguments then present them.

You state: “Finally, would an upload really be you? …But what is this replica? Is it subjectively “you” or is it a new, separate being? The idea that you can be conscious in two places at the same time defies our intuition. Parsimony suggests that replication will result in two different conscious entities. Simulation, if it were to occur, would result in a new person who is like you but whose conscious experience you don’t have access to.”

It always boggles my mind that smart people continue to fall into this philosophical trap. If we were discussing copying the software and memory of one robot (say R2D2) and putting it into a new robot body would we be philosophically concerned about whether it was the ‘same’ robot? Of course not, just as we don’t worry about copying our data and programs from an old laptop to a new one. If we have two laptops with the same data and software do we ask if one can ‘magically’ access the other’s RAM? Of course not.

We are evolved biological robots, period. That is what science really has told us unequivocally. Do you seriously disagree with this? There are no magic molecules in the brain that define us, just computation. Our consciousness is just another type of computation, one that computes a ‘self model’ to assist in intelligently planning our future actions. Such computationalism is the foundational assumption of cognitive science and I would argue of neuroscience as well. There is no room for magic in neuroscience.

You and an exact copy of you wake up in separate but identical rooms. One of the rooms is chosen at random to be blown up. You are saying there is a critical difference whether it is the copy’s room or the original’s. By assumption, this situation is perfectly symmetric from a materialist perspective yet you cling to the idea that there is an overwhelming fundamental difference.

When I hear a neuroscientist making this “identical copy” argument it is like hearing an evolutionary biologist saying that they believe all other animals evolved by natural selection, just not humans. It is like Galileo telling the Inquisition that the planets do revolve around the sun, but don’t worry, we all know that God is the one pushing them.

Seriously, if you are a neuroscientist you need to drop the ‘vitalism’ nonsense. If you want to say that uploading will take more than the structural connectome then say specifically what it will take. But to say uploading is impossible in principle because of this ‘copy problem’ intuition is to turn your back on the computational theory of mind in general.

Finally you state: “No one who has experienced the disbelief of losing a loved one can help but sympathize with someone who pays $80,000 to freeze their brain. But reanimation or simulation is an abjectly false hope that is beyond the promise of technology and is certainly impossible with the frozen, dead tissue offered by the “cryonics” industry. Those who profit from this hope deserve our anger and contempt.”

I personally agree, no one should pay $80,000 to freeze their brain without solid, open, scientifically rigorous evidence that at the very least the connectome is preserved. I would go further and say that regulated medical doctors are the only ones that should be allowed to perform such a procedure.

But I do not agree that research in this area is doomed to failure. Instead the scientific and medical communities should embrace such research following up on the promising brain preservation results I mentioned above. Scientists should work to perfect ever better methods of brain preservation in animal models, and medical researchers should take these protocols and develop them into robust surgical procedures suitable for human patients. Regulators should enforce the highest quality standards for such procedures to be performed by licensed professionals in hospitals. And laws should be changed to allow terminal patients like Kim to take advantage of them prior to cardiac arrest (avoiding weeks of self-starvation and dehydration).

Kim’s story is tragic at least in part because the scientific and medical community let her down. She was a neuroscientist, and one that fully embraced the computational theory of mind. She knew what she wanted: a high quality brain preservation prior to death. I am a neuroscientist who wants the same thing, and there are others. Who are you to say that we are wrong in our beliefs? Who are you to say that the hard-won progress that has already been made toward medical brain preservation should be abandoned?

-Kenneth Hayworth (The opinions expressed here are mine alone.)

Hi Ken,

Thanks for your detailed reply. I don’t think we disagree about most things. I tried to focus on a few substantial barriers (some solvable, some we don’t know) that are usually apparent to biologists but tend to get lost in some more enthusiastic quarters. For example, when there was a robot driven by a model loosely based on a (largely randomly parameterized) version of the worm connectome, there was a flood of “worm successfully uploaded” coverage in the places you’d expect. In the piece I wrote, I tried to highlight some of these barriers and narrowly criticize commercial services that use this kind of credulous enthusiasm and speculative technology as cover or to confer some kind of legitimacy, because I think some push back from scientists is warranted. I agree with you these services should not be offered at present, but I have no issue with research on freezing/preserving tissue. I don’t think Kim (in Amy’s story) was overly credulous or ill-informed, but I still feel there is an exploitable vulnerability when people are making end of life decisions.

A few specifics:

Your response to my statement that the worm connectome is insufficient to model activity is just making the same points I did, I think. It is in principle possible to simulate it, the connectome is not enough. I think your assertion that learning of an olfactory avoidance task would result in a structural (I assume you mean connectivity?) change to the worm nervous system is an open question but could be wrong. In taste avoidance learning, Ohno et al. showed that learning occurs through a specific insulin receptor isoform translocating to a set of synapses. I’d have to reread the paper, but I don’t think there was evidence that cell or synapse morphology, let alone connectivity patterns, were altered (though the EM level has not been checked as far as I know). http://www.sciencemag.org/content/345/6194/313.long Anyway, this is an open question that does not necessarily have one answer…engrams are probably diverse and many could be non-structural.

I didn’t dismiss any possible future technologies, I just wanted to say that selling something by invoking arbitrarily complex, non-existent technology is shady. As I said on twitter… talking about colonizing Mars is neat and working on it sounds amazing. But anyone selling tickets to Mars in 2015 is full of shit.

Finally, vitalism and your thought experiment: I assume that if an atom-by-atom replica of me were made in another room, neither the copy nor I would be any more real or conscious than the other. As such, I think each copy would rather not be the one blown up…neither would consider themself equivalent to the other. No vitalism is required, just behavioral adaptations for self-preservation. And though they are super fun, I think that thought experiments requiring layers of counterfactuals to make any sense at all offer poor guidance to what future technology will or won’t do.

Best,

Michael

> I just wanted to say that selling something by invoking arbitrarily complex, non-existent technology is shady

So you’re saying that cryonics organisations should only sell cryonics based upon the re-animation technology we have today? I.e. pretend that technology will never move forward, that the year 2200 will look (technologically) like the year 2000?

This seems like a very strange argument for an intelligent person to make; so much so that I fear I may have misunderstood you.

> talking about colonizing Mars is neat and working on it sounds amazing. But anyone selling tickets to Mars in 2015 is full of shit.

So what do you want cryonics organisations to do – wait till we’ve mastered reanimation (in, like, the year 2500) before they try to preserve anyone? But then there’d be no *point* in preserving anyone – either you’ve cured all diseases, or (worst case) you’d just skip straight to the brain scanning and uploading.

Perhaps you are just saying that cryonics is a priori a non-starter?

The argument would be: if you get to the point where you can prove 100% that it works then it is useless by definition (see above), AND people should never engage in a medical procedure which is not 100% proven to work, ergo you should never engage in cryonics.

Depending on how the freezing is done, the degradation in the tissue may also be repairable with future technology. Cold damage should… be predictable and thus subject to repair if you can get sufficiently good brain scans.

Even if it needs work, it may not need a ton of work to get a decently preserved brain.

“You and an exact copy of you wake up in separate but identical rooms. One of the rooms is chosen at random to be blown up. You are saying there is a critical difference whether it is the copy’s room or the original’s. By assumption, this situation is perfectly symmetric from a materialist perspective yet you cling to the idea that there is an overwhelming fundamental difference. Would you say the same if it was R2D2 and his copy?”

I’m pretty sure that R2D2 would care.

This is a terrible fallacy. You’re conflating “how things appear to any outside observer” with “what’s actually happening”.

As a living being, I don’t particularly care what it looks like to the outside observer. If my room blows up, I’m still quite dead. Whatever copies are made of me are not relevant from my perspective; it doesn’t matter who they are or what they’re doing in my stead, as I’m not around to see them.

Michael, perhaps this will entertain you while you’re waiting for a reply: http://diyhpl.us/~bryan/papers2/brain-emulation-roadmap-report.pdf

No, despite being well articulated, your rebuttal did not convince me.

I think that one problem here is a difference of terminology, and that Hayworth and Hendricks are talking past each other over the definition of the word “connectome”.

I think that Hendricks uses “connectome” to mean a graph of the connections between neurons, where “neuron” is a black box node in the graph, and “connection” is a line connecting two nodes in the graph. I think when Hendricks thinks of “connectome” he imagines the picture on his homepage showing the connections between the 302 neurons in C. elegans (http://biology.mcgill.ca/faculty/hendricks/), and nothing more.

Hayworth uses “connectome” to mean every single bit of information you can possibly infer from detailed serial electron micrographs through brain matter (assuming complete knowledge of how neurons and brains work “in general”). So for example, in an electron micrograph series you might be able to tell what type of neuron a particular neuron is by looking at its 3D shape, then you would be able to infer from complete information about that neuron type that all of its synapses are excitatory. In this case, Hayworth’s connectome, generated from these electron micrographs, does contain information about the types of molecules present in that neuron’s synapses, because that information can be inferred from the detailed structure of the neuron along with deep knowledge of that general type of neuron won from other experiments.

So Hayworth’s “connectome” is much much more complicated and detailed than Hendricks’ “connectome”, and is based on what you get when you look at electron micrographs and then infer everything you can based on general brain-knowledge. I think Hayworth uses this definition because he is focused on simulation of brains and because he deals with electron micrographs in his work; “connectome” to Hayworth is the ideal state of knowledge you can get from the electron micrographs he works with every day, if you really know what you’re looking at.
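The distinction above can be sketched as two data structures. This is only a minimal illustration under stated assumptions: the neuron names, morphology labels, contact areas, and the two lookup tables are all hypothetical, and treating contact area as a stand-in for synaptic strength is a deliberate simplification.

```python
from dataclasses import dataclass

# Bare wiring diagram: only which neuron connects to which.
bare_connectome = {("n1", "n2"), ("n3", "n2")}

# What electron micrographs might add per neuron and per synapse (made-up values).
@dataclass(frozen=True)
class Neuron:
    name: str
    morphology: str  # hypothetical shape label read from the 3D reconstruction

@dataclass(frozen=True)
class Synapse:
    pre: str
    post: str
    contact_area_um2: float  # hypothetical size measurement from the images

# "General knowledge" tables, assumed to come from separate experiments.
CELL_TYPE_BY_MORPHOLOGY = {"spiny_pyramidal": "pyramidal", "smooth_basket": "basket"}
SIGN_BY_CELL_TYPE = {"pyramidal": +1, "basket": -1}  # excitatory vs inhibitory

def annotate(neurons, synapses):
    """Return {(pre, post): signed strength}, inferred from structure plus the tables."""
    cell_type = {n.name: CELL_TYPE_BY_MORPHOLOGY[n.morphology] for n in neurons}
    return {
        (s.pre, s.post): SIGN_BY_CELL_TYPE[cell_type[s.pre]] * s.contact_area_um2
        for s in synapses
    }

neurons = [
    Neuron("n1", "spiny_pyramidal"),
    Neuron("n2", "spiny_pyramidal"),
    Neuron("n3", "smooth_basket"),
]
synapses = [Synapse("n1", "n2", 0.08), Synapse("n3", "n2", 0.05)]

annotated_connectome = annotate(neurons, synapses)
print(annotated_connectome)
```

Both structures describe the same pairs of connected neurons; the annotated version additionally carries a sign and a rough magnitude for each connection, none of which was imaged directly as a molecule but all of which was inferred from shape plus prior knowledge.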

When Hendricks says:

“Your response to my statement that the worm connectome is insufficient to model activity is just making the same points I did, I think. It is in principle possible to simulate it, the connectome is not enough. I think your assertion that learning of an olfactory avoidance task would result in a structural (I assume you mean connectivity?) change to the worm nervous system is an open question but could be wrong. In taste avoidance learning, Ohno et al. showed that learning occurs through a specific insulin receptor isoform translocating to a set of synapses. I’d have to reread the paper, but I don’t think there was evidence that cell or synapse morphology, let alone connectivity patterns, were altered (though the EM level has not been checked as far as I know). ”

He’s assuming that Hayworth is expecting that olfactory learning in C. elegans would have to correspond to an actual structural change in the pattern of connections in C. elegans (say neuron #105 loses one of its connections to neuron #220). It certainly seems likely that learning in C. elegans can occur without such gross structural reorganization. But Hayworth is NOT claiming such a thing! When Hayworth says:

“Are you saying that even if every detail of electrophysiology were known for the general C. elegans that one could still not interpret the structural connectome of a particular C. elegans to determine if it has or has not learned an olfactory avoidance task? Even if you suspect this, where is your evidence?”

He’s asking whether, given his vastly broader definition of “connectome”, you can detect any changes at all in detailed electron micrographs of a C. elegans’ neurons. To say that you can’t means that there will be no difference at all between the detailed electron micrographs of worms that have / haven’t learned the task. It seems less likely to me that this is the case.

When we’re talking about the thing that’s preserved using a Brain Preservation Prize-winning brain preservation method, we’re talking about Hayworth’s connectome, not Hendricks’ connectome, because you can in principle A) get detailed electron micrographs from a preserved brain and B) attain general knowledge about all neuron types in a brain, given enough time.

Hendricks, you said:

“Your response to my statement that the worm connectome is insufficient to model activity is just making the same points I did, I think. It is in principle possible to simulate it, the connectome is not enough. ”

and:

“…engrams are probably diverse and many could be non-structural.”

I would fully agree with you that a Hendricks connectome is not enough to simulate a C. elegans. But, do you think that a Hayworth connectome IS enough to simulate a C. elegans? If not, what detail in particular about the C. elegans do you expect to be both hidden from a detailed series of electron micrographs and not inferable from general knowledge about C. elegans? Do you think that it would be acceptable to offer a service that preserves Hayworth connectomes in humans? If not, what additional preservation criteria beyond a Hayworth connectome would you want before such a preservation procedure would A) not “deserve our anger and contempt” and B) be likely to actually preserve a person’s brain faithfully enough that a password they had memorized could be extracted from their preserved brain?

sincerely,

–Robert McIntyre

Mr. Hayworth, I tend to agree with every point you made – including that of the computational model of the mind. But as ingrained by evolution, each such computational model – whether original or copy – will generally have an instinct for self-preservation, and each such model will sacrifice the other for preservation of the self – if push comes to shove. So instead of aiming at a copy-paste and creating two identical but separately conscious entities, would it not be even theoretically possible to do a cut-paste of the data that the mind holds? A copy would have all of one’s experience in artificial memory but would it still be the same individual that was born 30 years ago and actually lived the experiences? It is granted that each would be identically sincere in its personality, desire and will to live (or otherwise) as the other – and each would care if they themselves were being blown up. But each would probably care less if the other was set to explode. This is just my view but I think I’d be a little less sad if a copy of my dog died instead of my dog. It would be monstrous not to be sad because both original and copied dog loved me identically. But the original is still where the copy came from. So keeping that intact (speaking of a human brain) is perhaps slightly more pertinent than physically rendering it beyond revival – in order to create a copy. What I’m suggesting is that the copy idea is a noble one and should be pursued, but shouldn’t it be considered a last resort to salvage personality in an identical shadow? Shouldn’t the primary objective be preservation of the original as far as possible (with a copy/neural map as an undesirable but necessary backup in case the original is destroyed)? What I only propose is an approach oriented toward cut rather than copy. I am only a layman and would greatly appreciate your inputs and thoughts on the subject.

Realistically, if there was a complete clone of me (kinda like if I went back a little bit in time and there were two of me), I’d basically consider it another me.

I’ve always thought that would be interesting… You could split goals with another you, and it would basically be you. I wouldn’t even mind another me going out to have fun while I worked, because it was basically me going out.

It’s been 7 years since the last comment here. I wonder if opinions have changed since then?

If you could go back in time, then you would meet an ‘original’ like yourself. It would not be an artificial vessel with all your experience, i.e., your clone. With your clone it isn’t exactly another you: it is you and a copy with all your experience. While this isn’t a problem with software, I’ll cite an example of how it might create a problem for you. Suppose you have a lot of work to get done but at the same time need to go for your honeymoon. So you create a clone so that it might take care of your work for you, while you go for the honeymoon.

But, once you create the clone you find that it wants you to finish the work while it goes for the honeymoon. Now you both wanna go for the honeymoon and your spouse will flip out trying to figure out which one is the real you. Either way, if both of you are you and you both go for the honeymoon, then no work gets done.

On a serious note though, under current conditions of technology, I wouldn’t hesitate for an instant to have my brain cryopreserved with aldehyde stabilization upon clinical death. I’d rather volunteer for it if I could.

Check out the latest newsletter on cryonics.org

http://dgmedia-design.com/ci_news_nov2015/